KEVIN ROOSE

CHENNAI: For the past few days, I’ve been playing around with DALL-E 2, an app developed by the San Francisco company OpenAI that turns text descriptions into hyper-realistic images. OpenAI invited me to test DALL-E 2 (the name is a play on Pixar’s WALL-E and the artist Salvador Dalí) during its beta period, and I quickly got obsessed. I spent hours thinking up weird, funny and abstract prompts to feed the AI: “a 3-D rendering of a suburban home shaped like a croissant,” “an 1850s daguerreotype portrait of Kermit the Frog,” “a charcoal sketch of two penguins drinking wine in a Parisian bistro.” Within seconds, DALL-E 2 would spit out a handful of images depicting my request, often with jaw-dropping realism. One of the images below is what DALL-E 2 produced when I typed in “black-and-white vintage photograph of a 1920s mobster taking a selfie.” The other is how it rendered my request for a high-quality photograph of “a sailboat knitted out of blue yarn.”

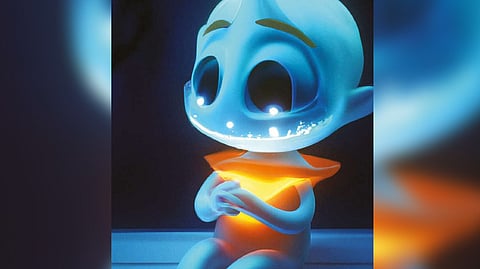

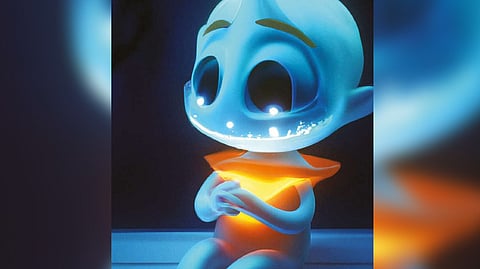

DALL-E 2 can also go more abstract. The illustration pictured in this article, for example, is what it generated when I asked for a rendering of “infinite joy.” (I liked this one so much I’m going to have it printed and framed for my wall.)

What’s impressive about DALL-E 2 isn’t just the art it generates. It’s how it generates art. These aren’t composites made out of existing internet images — they’re wholly new creations made through a complex AI process known as “diffusion,” which starts with a random series of pixels and refines it repeatedly until it matches a given text description. And it’s improving quickly — DALL-E 2’s images are four times as detailed as the images generated by the original DALL-E, which was introduced only last year.

DALL-E 2 got a lot of attention when it was announced this year, and rightfully so. It’s an impressive piece of technology with big implications for anyone who makes a living working with images — illustrators, graphic designers, photographers and so on. It also raises important questions about what all of this AI-generated art will be used for, and whether we need to worry about a surge in synthetic propaganda, hyper-realistic deepfakes or even non-consensual pornography.

But art is not the only area where artificial intelligence has been making major strides.

Over the past 10 years — a period some AI researchers have begun referring to as a “golden decade” — there’s been a wave of progress in many areas of AI research, fuelled by the rise of techniques like deep learning and the advent of specialised hardware for running huge, computationally intensive AI models. Some of that progress has been slow and steady — bigger models with more data and processing power behind them yielding slightly better results.

But other times, it feels more like the flick of a switch — impossible acts of magic suddenly becoming possible.

Just five years ago, for example, the biggest story in the AI world was AlphaGo, a deep learning model built by Google’s DeepMind that could beat the best humans in the world at the board game Go. Training an AI to win Go tournaments was a fun party trick, but it wasn’t exactly the kind of progress most people care about.

But last year, DeepMind’s AlphaFold — an AI system descended from the Go-playing one — did something truly profound. Using a deep neural network trained to predict the three-dimensional structures of proteins from their one-dimensional amino acid sequences, it essentially solved what’s known as the “protein-folding problem,” which had vexed molecular biologists for decades.

This summer, DeepMind announced that AlphaFold had made predictions for nearly all of the 200 million proteins known to exist — producing a treasure trove of data that will help medical researchers develop new drugs and vaccines for years to come. Last year, the journal Science recognised AlphaFold’s importance, naming it the biggest scientific breakthrough of the year.

Or look at what’s happening with AI-generated text. Only a few years ago, AI chatbots struggled even with rudimentary conversations — to say nothing of more difficult language-based tasks. But now, large language models like OpenAI’s GPT-3 are being used to write screenplays, compose marketing emails and develop video games. (I even used GPT-3 to write a book review for this paper last year — and, had I not clued in my editors beforehand, I doubt they would have suspected anything.)

AI is writing code, too — more than a million people have signed up to use GitHub’s Copilot, a tool released last year that helps programmers work faster by automatically finishing their code snippets.

Then there’s Google’s LaMDA, an AI model that made headlines a couple of months ago when Blake Lemoine, a senior Google engineer, was fired after claiming that it had become sentient.

Google disputed Lemoine’s claims, and lots of AI researchers have quibbled with his conclusions. But take out the sentience part, and a weaker version of his argument — that LaMDA and other state-of-the-art language models are becoming eerily good at having human-like text conversations — would not have raised nearly as many eyebrows. In fact, many experts will tell you that AI is getting better at lots of things these days — even in areas, such as language and reasoning, where it once seemed that humans had the upper hand.

“It feels like we’re going from spring to summer,” said Jack Clark, a co-chair of Stanford University’s annual AI Index Report. “In spring, you have these vague suggestions of progress, and little green shoots everywhere. Now, everything’s in bloom.”

In the past, AI progress was mostly obvious only to insiders who kept up with the latest research papers and conference presentations. But recently, Mr. Clark said, even laypeople can sense the difference. “You used to look at AI-generated language and say, ‘Wow, it kind of wrote a sentence,’” Mr. Clark said. “And now you’re looking at stuff that’s AI-generated and saying, ‘This is really funny, I’m enjoying reading this,’ or ‘I had no idea this was even generated by AI.’”

There is still plenty of bad, broken AI out there, from racist chatbots to faulty automated driving systems that result in crashes and injury. And even when AI improves quickly, it often takes a while to filter down into products and services that people actually use. An AI breakthrough at Google or OpenAI today doesn’t mean that your Roomba will be able to write novels tomorrow.

But the best AI systems are now so capable — and improving at such fast rates — that the conversation in Silicon Valley is starting to shift. Fewer experts are confidently predicting that we have years or even decades to prepare for a wave of world-changing AI; many now believe that major changes are right around the corner, for better or worse.

Kevin Roose is a technology columnist with The New York Times.

©2022 The New York Times
